You should be using it before you even know how to write a line of code. Set it up, Day One.

…if you don’t have source control… Mistakes can’t be rolled back easily. … I’ve never heard of a project using source control that lost a lot of code.

Joel Spolsky, The Joel Test

It’s For Everything

With source control, you can’t lose a lot of anything that you put into it. Get everything you’re creating—code, art, documentation—under source control.

I’ve never seen an experienced professional game development team ship a project without it. Not. One. The industry as a whole couldn’t function without source control. I can’t watch game dev streams without it, in the same way I can’t watch motorcyclists ride without gear: I’ve witnessed the results first-hand.

Development is a creative, technical labor, and every feature hides countless implementations and bugs. Games multiply these risks, because many of the best features love to ambush your designs late in development. Games thrive on rapid failure; it reveals certainties. With development as your biggest expense, you want course correction to be quick. Source control enables fearless experimentation, so you can test and discover without risking good work (or spent motivation) along the way.

Backups Are Not It

Don’t be fooled: backup schemes are not source control systems. Both are vital but different; backups are for disasters, while source control is for getting work done.

- Backups capture whole things—entire machines, directories, etc.—at the moment that the backup is run, ready or not

- Source control captures a detailed history of changes, each explicitly when the author is ready to save them

- Restoring individual files from backups is ludicrously painful; frequent backups make it worse

- Restoring individual files in source control is its main function, and it’s fucking awesome at it
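To make the contrast in the list above concrete, here is a toy Python sketch. The `Backup` and `SourceControl` classes, the file names, and the contents are all made up for illustration; no real tool works exactly like this. The point is only that a backup grabs everything at whatever moment it fires, while source control records a change when the author says it is ready.

```python
class Backup:
    """Toy scheduled backup: snapshots the whole working directory, ready or not."""

    def __init__(self):
        self.snapshots = []

    def run(self, workdir):
        # Copies every file exactly as it exists right now.
        self.snapshots.append(dict(workdir))


class SourceControl:
    """Toy source control: records a file version only when the author commits."""

    def __init__(self):
        self.history = {}  # path -> list of (message, content), oldest first

    def commit(self, path, content, message):
        self.history.setdefault(path, []).append((message, content))

    def restore(self, path, version):
        # version is a 0-based index into that one file's history.
        return self.history[path][version][1]


workdir = {"player.py": "speed = 5", "enemy.py": "hp = 10"}
backup = Backup()
scm = SourceControl()

scm.commit("player.py", workdir["player.py"], "Baseline player speed")
workdir["player.py"] = "speed = 500  # wild experiment"
backup.run(workdir)  # the scheduled backup fires mid-experiment

restored = scm.restore("player.py", 0)
print(restored)                          # speed = 5
print(backup.snapshots[0]["player.py"])  # speed = 500  # wild experiment
```

The backup faithfully preserved the experiment. Source control handed back the last version someone actually meant to keep.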

Backups protect from catastrophe, like system or device failures. When something stops working or gets wrecked—a fire, a Dell BIOS update—restoring a backup gets you running again. If you are touching a backup, something went wrong.

Not Even Close

If you’re backing up manually, then you’re doing WAY too much work. But scheduled backups can also be a pain because they can run at the wrong time and preserve files in states you really didn’t want saved (like when you are experimenting).

Source control protects from the unintended side effects of getting shit done, defends progress, and allows working at top speed.

Set up source control, then scheduled backups of the source control system.

How They Function

Generally, there are two basic models: client-server and distributed. Each has its own quirks.

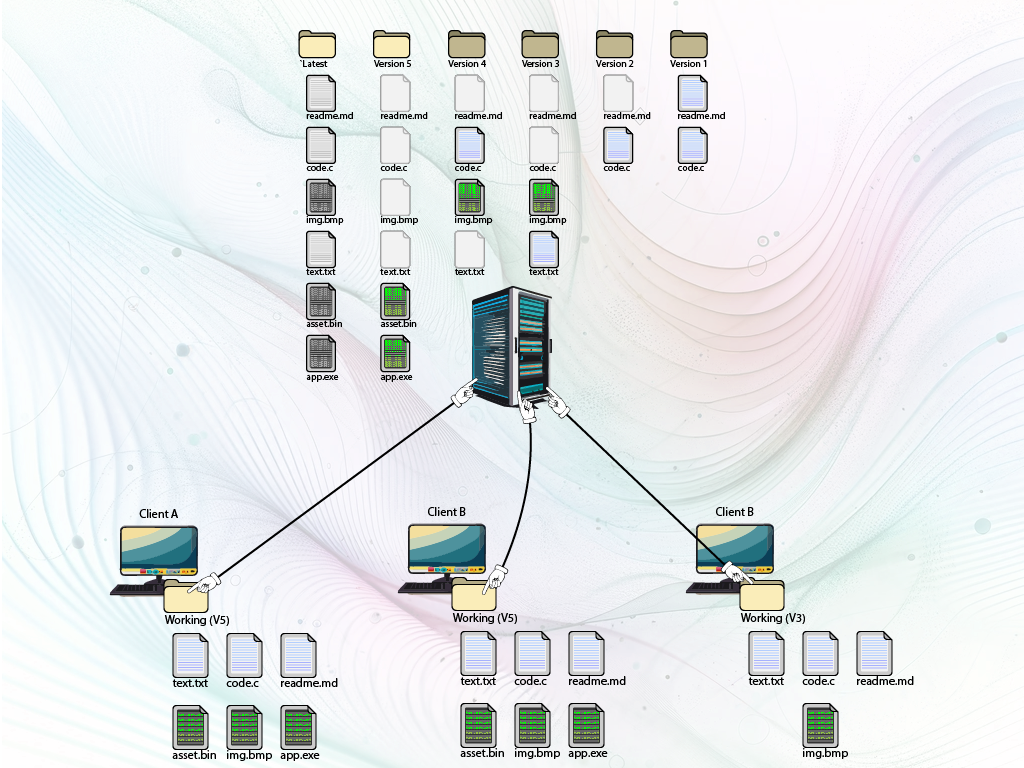

The client-server model is what the software industry grew up with. A server is boss, owning the file repository (depot) that lives on it. The server holds everything ever submitted—a full history of every version, of every submitted file. Users’ machines keep local copies of the project in any state they need—typically the latest working version. This is the sandbox in which they work.

- Clients copy folders and files from the remote depot to the local machine

- Users work on the files—some files, like binaries, are locked if only one person can edit at a time

- Changed files are committed back to the remote depot, usually with a description of the work done

- Centralized—easier to have “official” versions and enforce usage rules

- Simple—easy to understand concepts around remote and local copies, checkins and updates

- Smallest local footprint—client machines only need to have the room for one version of the project

- Server setup and administration can be complex, expensive, or both

- Depends on a network connection between client and server to do most work

- No redundancy—backups are critical to protect against server failures
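The client-server workflow above can be sketched as a toy model in Python. Everything here is hypothetical (the `Depot` class, its method names, the users `ana` and `ben`); it is not the API of Perforce, Subversion, or any real server. It only shows the shape of the idea: one server owns all history, clients sync the latest version, and an exclusive lock keeps two people from stomping on the same binary.

```python
class Depot:
    """Toy central server: owns every submitted version of every file."""

    def __init__(self):
        self.versions = {}  # path -> list of (description, content), oldest first
        self.locks = {}     # path -> user holding the exclusive lock

    def sync(self, path):
        # A client copies only the latest version to the local machine.
        return self.versions[path][-1][1]

    def lock(self, path, user):
        # First user to ask gets the lock; everyone else is refused.
        holder = self.locks.setdefault(path, user)
        return holder == user

    def submit(self, path, content, user, description):
        holder = self.locks.get(path)
        if holder is not None and holder != user:
            raise PermissionError(f"{path} is locked by {holder}")
        self.versions.setdefault(path, []).append((description, content))
        self.locks.pop(path, None)  # in this sketch, submitting releases the lock


depot = Depot()
depot.submit("hero.png", b"v1", "ana", "Add hero sprite")

ana_got_lock = depot.lock("hero.png", "ana")
ben_got_lock = depot.lock("hero.png", "ben")

try:
    depot.submit("hero.png", b"v2-ben", "ben", "Ben's recolor")
    blocked = False
except PermissionError:
    blocked = True

depot.submit("hero.png", b"v2", "ana", "Recolor cape")
latest = depot.sync("hero.png")
print(ana_got_lock, ben_got_lock, blocked, latest)  # True False True b'v2'
```

Note where the history lives: only in `depot`. Lose that one object and every old version goes with it, which is why the server needs backups.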

In the distributed model, every machine is like its own client and server, both a strength and a weakness. Clients can work independently, and then allow others to pull their changes. There can also be a shared, centralized “main” repository (like GitHub) as the official source.

- Developers clone a “main” repository, copying the project (and its whole history) to their local machine

- Developers work in their own branches, with full control over what they change

- Commits are made locally, to these branches

- Branches are then merged (often locally), where they can be tested

- Branches can be pushed to remote repositories, like “main”, or pulled from other clients

- Redundant—every client has the whole repository and history

- Good for large teams, since most of the housekeeping work is done locally

- Less dependent on steady connectivity; work can happen offline

- Bloated—clients have the whole repository, including history; large projects eat up everyone’s drives

- Complex—cloning, branching, merging can be confusing; even veterans can get lost in it

- Less secure—clients have the whole repository, including history

- Complex client—learning how to set up and use can be confusing / mind melting
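Here is the distributed model as a similar toy sketch in Python. The `Repo` class and its methods are invented for illustration, and it flattens history into a plain list, whereas real systems like Git track a graph of hashed commits with branches and merges. What it does show is that every clone carries the full history and that commits happen locally until someone pulls them.

```python
class Repo:
    """Toy distributed repository: every copy holds the whole history."""

    def __init__(self, name, history=None):
        self.name = name
        self.history = list(history or [])  # every commit, oldest first

    def clone(self, name):
        # A clone copies the entire history, not just the latest files.
        return Repo(name, self.history)

    def commit(self, message):
        # Purely local: no server or network involved.
        self.history.append(message)

    def pull(self, other):
        # Take any commits the other repo has that this one lacks.
        for entry in other.history:
            if entry not in self.history:
                self.history.append(entry)


main = Repo("main")
main.commit("Initial project")

ana = main.clone("ana")
ben = main.clone("ben")
ana.commit("Add jump")  # both work offline, independently
ben.commit("Add dash")

main.pull(ana)  # stands in for ana pushing to the shared repository
main.pull(ben)
ana.pull(main)  # ana catches up with ben's work

print(main.history)  # ['Initial project', 'Add jump', 'Add dash']
print(ben.history)   # ['Initial project', 'Add dash']
```

If `main` vanished, `ana` could rebuild it from her own copy. That is the redundancy upside, and the same full copy on every machine is the bloat downside.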

Differences and Merging

When more than one person is working on a project, the same file may get changed in two different places. Some systems can prevent this by requiring files to be locked so that only one user can change them at a time. Other systems, especially distributed models, actually encourage concurrent edits for some kinds of files because they expect the changed files can be merged. Binary files, like bitmaps, can’t be merged. But text-based files, like code, can be.

- Files in conflict are “diffed”—a tool compares the files, highlighting lines added, removed, or changed

- Diffed files are “merged”—users choose the lines to keep/reject, or they let automation decide
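The two steps above can be sketched with Python's standard `difflib` module plus a deliberately naive merge. The file contents and the `merge_lines` helper are made up for this example, and the merge only handles files where lines were edited in place (same line count on all sides). Real merge tools cope with inserted and deleted lines too.

```python
import difflib

base = ["speed = 5", "jump = 2", "hp = 10"]    # common ancestor
mine = ["speed = 6", "jump = 2", "hp = 10"]    # I changed speed
theirs = ["speed = 5", "jump = 2", "hp = 12"]  # they changed hp

# Step 1: diff. Lines starting with "-" exist only in mine, "+" only in theirs.
diff = list(difflib.unified_diff(mine, theirs,
                                 fromfile="mine", tofile="theirs", lineterm=""))
print("\n".join(diff))


def merge_lines(base, mine, theirs):
    """Naive three-way merge for equal-length files (in-place line edits only)."""
    merged, conflicts = [], []
    for index, (b, m, t) in enumerate(zip(base, mine, theirs)):
        if m == t or t == b:
            merged.append(m)  # same on both sides, or only I changed it
        elif m == b:
            merged.append(t)  # only they changed it
        else:
            merged.append(m)  # both changed it differently: a human must choose
            conflicts.append(index)
    return merged, conflicts


# Step 2: merge. Non-overlapping edits combine automatically.
merged, conflicts = merge_lines(base, mine, theirs)
print(merged)     # ['speed = 6', 'jump = 2', 'hp = 12']
print(conflicts)  # []

# If both sides edit the same line, automation gives up and flags it.
_, clash = merge_lines(base, mine, ["speed = 7", "jump = 2", "hp = 10"])
print(clash)      # [0]
```

This works because text has lines to compare. A bitmap has no equivalent, so a binary conflict always comes down to picking one whole file over the other.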

So Many Products! So Many SKUs!

But which option for source control? It can depend on a lot of stuff, and often you use more than one.

Use anything that works for you.

For example, here’s a breakdown of Basic Array’s latest decision.

| App | Benefit | Problem |
|---|---|---|
| Git | Free, Ubiquitous | distributed sucks at big binaries |
| Mercurial | Like Git without the ubiquity | like Git |
| Perforce | Industry standard, Free <=5 users | self-managed is complex, Managed and over 5 users is $$$ |
| Subversion | Free <250 users, large binaries OK | self-managing is complex, Hosted/Managed is $$ |
| Unity Version Control | Plastic SCM is on par with Perforce | Unity corp == donkey balls, $$ >3 users, $$$ for >15 |

“How did it go?” you ask.

Got Git

It’s 2024 and everyone needs to know how to use Git. You should also know that Git is not GitHub. One is a version control system, the other is a popular repository hosting site (that also makes one of the best graphical interfaces for Git users).

It’s not perfect, and it’s confusing at first, but Git is superb for text-based needs (like pure code projects), it’s free, and it’s everywhere.

Like other decentralized solutions, it has issues scaling to large content-rich projects.

Any update to a binary file is registered as a complete file change in Git. Git will store the entire binary in its history instead of just storing the changes. Frequently updating these binary files makes the repository grow in an unwanted manner. A larger repository means slower fetches and pulls.

Ten versions of a 10MB asset? That’s a chunky 100MB on everyone’s drives. Kinda gross.
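The arithmetic is simple enough to sketch in Python. The function name and the `model` labels are made up, and this is a worst-case estimate that assumes every version of a binary is kept whole, with no compression or delta savings.

```python
def local_footprint_mb(file_size_mb, versions, model):
    """Rough worst-case local disk use for one binary asset's history."""
    if model == "distributed":
        # Every clone carries every stored version.
        return file_size_mb * versions
    if model == "client-server":
        # The client holds one version; the rest stay on the server.
        return file_size_mb
    raise ValueError(f"unknown model: {model}")


distributed_mb = local_footprint_mb(10, 10, "distributed")
client_server_mb = local_footprint_mb(10, 10, "client-server")
print(distributed_mb)    # 100
print(client_server_mb)  # 10
```

Multiply that by every texture, mesh, and audio file in a game and the gap between the two models gets wide fast.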

Git LFS (Large File Storage) attempts to minimize these problems, but then you’re back to more complicated setups for every client AND storing files on remote servers AND separating data from records pointing at it…
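That "separating data from records pointing at it" part can be sketched in Python. The three-line pointer layout below follows the Git LFS pointer file format as I understand it (a version line, a SHA-256 object ID, and a size in bytes). The `make_pointer` helper, the stand-in asset, and the `remote_store` dict are illustrative assumptions. In real use the `git lfs` tooling writes these pointers for you.

```python
import hashlib


def make_pointer(blob: bytes) -> str:
    """Build the small text record that gets committed in place of the blob."""
    oid = hashlib.sha256(blob).hexdigest()
    return (
        "version https://git-lfs.github.com/spec/v1\n"
        f"oid sha256:{oid}\n"
        f"size {len(blob)}\n"
    )


# Stand-in for a real asset: a few header bytes plus 10 KiB of zeros.
asset = b"\x89PNG" + bytes(10 * 1024)

pointer = make_pointer(asset)  # tiny, lives in the repository history
remote_store = {hashlib.sha256(asset).hexdigest(): asset}  # big, lives on a server

print(pointer)
print(len(asset), "bytes of asset vs", len(pointer), "bytes of pointer")
```

The history stays small because it only ever holds pointers. The cost is that every client now needs the LFS tooling and a working connection to wherever the real bytes live.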

For example, take Epic Games, who heavily rely on GitHub to host the Unreal Engine repository. Their internal game projects, like Fortnite, are on Perforce. So while they include a project to showcase best practices, the Lyra Sample Game, and host the source code on GitHub, guess what’s not there? The assets!

To make use of the Lyra source code, you will need to download the content from the Unreal Marketplace through the Epic Games Launcher.

Lyra Sample Game README.md

As popular as it is with platform teams, I’m not aware of any AAA projects using Git for game production. Instead, the most popular solution is…

Perforce (nka Helix Core)

Most of you will be too young to remember the early ’00s, but Perforce saved game development from SourceSafe and CVS. That was back when Perforce was Perforce, long before some dorks tried to get us to call it “Helix Core” (barf!). Have we all hated on Perforce at some point? Sure, it’s been beleaguered before (e.g. P4Win to P4V). But you know, it’s come a long way, and also we’ve seen what others have tried foisting on us.

If you’re using Unreal, and you want the best integration between the editor and your source control, Perforce is it. For some features in Unreal 5 like Unreal Game Sync, it’s the only choice.

It’s free if you need five or fewer users, but you’ll have to host it yourself. Otherwise, there are a few providers that run Perforce in the cloud, SaaS style: super convenient, super expensive.

The good news is that, if you want to try hosting Perforce yourself, you can just start by running Helix Core server on your local machine—Windows, Mac, or Linux.

Subversion (SVN)

So it’s a bit dusty, but file versioning technology doesn’t need to be cutting edge, it needs to work. Like Perforce, SVN is client-server. It’s less featured—Perforce streams are really nice—but it is effectively FREE.

With its WebDAV compliance, Subversion allows clients to mount the repository like a shared drive, simplifying working with versioned files. The downside is that, when used in this way, those file histories and version numbers balloon, and you lose many natural sanity checks that come from requiring users to decide when a file is ready to be submitted. But it’s also easy to set up different repositories on the same server to support both asset development (auto-versioning) and project development (manual versioning) needs.

I find Subversion a little less robust, reliable, and feature-rich than Perforce. But when cost is a factor, SVN becomes a strong option.

I run SVN on a remote virtual private server on Ubuntu, but if you want to run the server on Windows, you can run VisualSVN Community for free. https://www.visualsvn.com/server/licensing/

Should’ve Been Plastic

Plastic SCM was great. Maybe it still is. Plastic is client-server, like Perforce, but it was a fully hosted experience that used to have a much friendlier pricing structure than Perforce. But software success stories are like boxing movies (the greats fall, and turn into lousy bums), and that company’s story hit its Act III when Unity acquired Plastic, rerolled it as Unity Version Control, re-priced it, and integrated it into Unity DevOps… I hear it still works. And some old users were even grandfathered into their old pricing. But I’m all the way over Unity Technologies. Look, since around 2008, I’ve steered hundreds of thousands of dollars’ worth of revenue their way. I’ve advised companies to move their teams to the engine, some of whom would go on to be directly showcased in Unity promotional, event, and award content. But now, more than fifteen years later, I advise anyone but my enemies against entangling themselves with that organization.

What Basic Array is Using

This is an admission, not a recommendation: Basic Array is using three systems.

- Git for custom engine work, both Unreal and Godot

- Perforce for Unreal projects

- Subversion for asset development and Godot projects

Just start with one. General consensus would be Git. The only truly wrong answer is not to use source control. Just use something. Any. Thing.

I probably won’t do any complete beginner’s guides because YouTube and search engines should have you covered. But I will keep a few posts up to date on setting up the systems here, because getting a for-real depot on a server is not always the easiest thing if you don’t know where to start.

- Setting up a Perforce Server for Unreal

- Setting up a Subversion Server (coming)

I believe in you!

GLHF—Isaac